Product & UX/UI Design at Sedai

Node Optimization

Background

Sedai is an autonomous cloud optimization SaaS product that helps businesses reduce cloud spend and wastage by optimizing storage & compute resource allocations. Companies connect their cloud resources to Sedai, which then analyzes them for opportunities to optimize and save.

This project focused on refining a core feature of Sedai’s platform: node optimization for Kubernetes and ECS clusters. Because of last-minute customer deadlines, engineering teams initially built and shipped the Node Optimization flow without it going through UX design. As a result, the feature’s user experience and narrative weren’t as strong as they could have been. This project shows how advocating for thoughtful user experience design helps meet business goals and keep users happy. Revisiting the node optimization UX became essential, as it is a major selling point for Sedai.

What the heck is node optimization?

Node optimization involves adjusting cloud infrastructure configurations to improve performance and resource efficiency. Think of it like making sure all the toys in a toy box are neatly organized so there’s more space and everything is easy to find and use. Sedai does the same for customers’ node infrastructure: it identifies ways to save space (CPU & memory), then organizes the toy box for you (automatically executes recommendations).

Why It Matters

At Sedai, our commitment to data-driven design ensures that every feature we develop delivers meaningful impact. Here’s why optimizing node configurations was essential for our business goals:

Boosting Customer Acquisition

According to the RightScale State of the Cloud Report, around 30-45% of cloud spend is estimated to be wasted due to underutilized resources, misconfigurations, or overprovisioning. Many companies overspend simply because they lack optimization in how they allocate and use cloud resources.

Based on IDC Research, about 60% of cloud users have reported challenges with cost management and optimization, indicating a high demand for tools and solutions that can help streamline cloud spend.

Gartner estimates that by 2025, 70% of enterprises using cloud infrastructure will actively seek cloud cost optimization tools to manage their expenditures and improve efficiency.

Strengthening Customer Retention

21 of 24 Sedai customers have Kubernetes or ECS clusters integrated into Sedai, and 11 of these companies are Fortune 500 companies.

Across the 21 companies with clusters connected to Sedai, there are over 200 clusters. Sedai has identified optimization opportunities in 90% of these clusters.

18 customers have expressed confusion related to the Node Optimization flow.

Problem Statements

Enhancing node optimization began with defining problem statements for the current experience and interface. This involved analyzing all current screens related to node optimization to identify areas of inconsistency or stumbling blocks for users.

Key Understanding

There are three types of clusters Sedai can optimize: Karpenter Kubernetes, Non-Karpenter Kubernetes, and ECS. For the sake of following along, you don’t need to know the differences between them; you simply need to know that they are different.

Problem Statements

The node optimization flow is needlessly and dramatically different from the flow for other types of Sedai-offered optimizations.

The experience is inconsistent across the three types of clusters.

The current UI stacks node recommendations vertically, making it unclear which recommendations correlate to which existing node groups.

The usage analysis of existing node groups is not shown alongside the recommendation (in other words, the why is not paired with the what).

For Kubernetes clusters, it’s unclear what the toggle next to Recommended means.

For Kubernetes clusters, the status column displays incorrect and meaningless information.

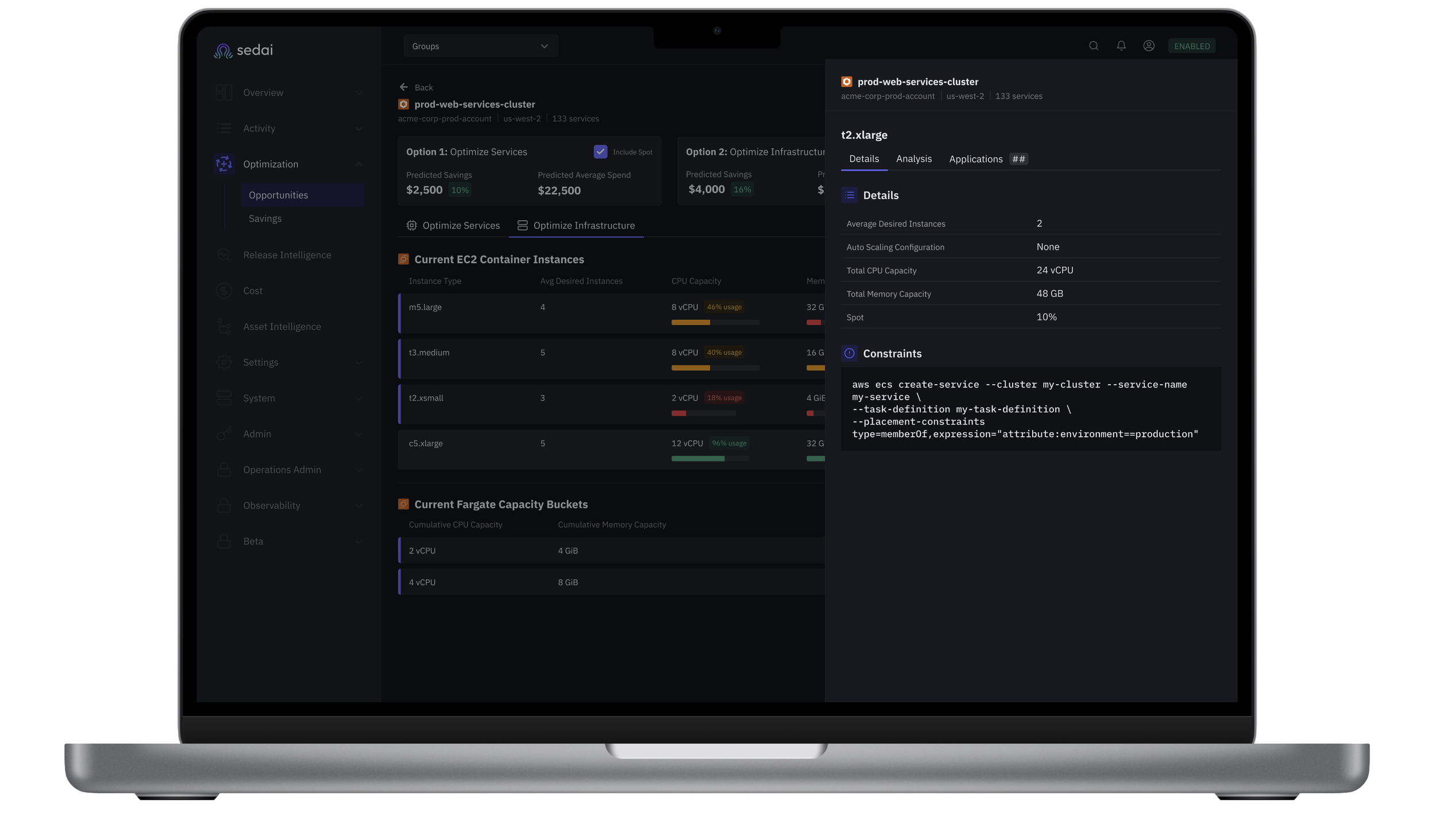

For ECS clusters, EC2 & Fargate recommendations look as if they can be mixed and matched, when in fact they cannot be.

When showing counts of nodes/instances, it’s unclear if it’s an average or a point-in-time count.

A walkthrough of the current Node Optimization flow for the three cluster types illustrates the inconsistency and confusion it causes.

Research

Because node optimization is complex and varies across the three types of clusters, my research focused heavily on understanding the nuances of the feature itself. I used a combination of resources, including tools like ChatGPT and scheduled discussions with in-house experts. Asking the right questions was essential to refining the user experience and identifying specific optimization needs across clusters. Additionally, coordinating with the three cluster teams, who typically work independently, was crucial to align our vision for the node optimization journey and ensure key information is displayed consistently across all cluster types.

This A/B test helped determine whether users prefer to see the node group name and instance type in the same column. 66% of respondents preferred to see them in separate columns.

Here’s an example of how I identified granular questions for the Sedai cluster teams. This process clarified the representation of specific data in the UI and revealed inconsistencies in how data was displayed across different cluster types.

When asked about Sedai’s first version of the node optimization flow, a user kindly provided this feedback to inform the UX revisions.

Defining the Scenarios

It quickly became apparent through my research that the main problem statement for the node optimization flow is that the experience is inconsistent across the different cluster types. To address this, I defined the different scenarios that needed to be accounted for in the UX flow.

A/B Testing

As part of our research, we conducted A/B testing in areas where customers had previously expressed confusion. Our agile UX team at Sedai actively monitors the product chatbot, observes customer calls, and keeps an eye on community Slack channels to identify these small but significant areas needing improvement.

To gather user feedback, we often send A/B tests and prototypes to internal team members. Since our primary user persona is a site reliability engineer, our product is designed for technology power users. Because nearly everyone at Sedai is a cloud engineer, we leverage this expertise by testing with our colleagues, which lets us conduct user research without overwhelming our customers with surveys and prototypes. Our typical internal testing approach involves selecting a developer who is familiar with the project’s content but hasn’t participated in its development, minimizing potential bias in the feedback. Here are some A/B tests we conducted for this project:

This A/B test helped determine whether users prefer to see Auto Scaling configurations as a range or as a minimum and maximum. 66% of respondents preferred the range.

This A/B test helped determine which typeface should be used for node group names. 100% of respondents preferred a monospace typeface, as this is typical in other cloud management products.

Prototype A/B Testing

After identifying problem statements & gathering user feedback, I crafted two clickable prototypes to send to five test users. Both prototypes were specific to Karpenter Kubernetes clusters but included design decisions that would affect all three cluster types. Concept A shows minimal recommendation information on the main screen, prompting the user to open a side drawer to see the recommendation details and analysis. Conversely, Concept B shows elements of the recommendation directly on the main page.

Concept A

Four of the five test users preferred Concept A. Users also provided the following feedback:

Showing both counts (the Existing count of node pools and the Recommended count of node pools) feels overwhelming.

It is helpful to see a count of applications in each node group or node pool.

It’s preferred to see the names of instance types on the main page when possible (as opposed to showing “Mixed” when multiple instance types are in use).

For Non-Karpenter Kubernetes clusters, it’s helpful to see usage analysis on the main page to give users an indication of why Sedai is recommending an optimization.

Concept B

Final Design

Iterations were made based on user feedback, and I finalized the following prototype to be handed off for UI development:

Solutions

The final design successfully created a consistent node optimization experience where possible across the three cluster types by incorporating the following changes:

Instead of listing current and recommended node groups on the main page, list only existing node groups. This better aligns with how Sedai displays other types of recommendations.

Show similar information where possible across the three cluster types and ensure data is consistent. For instance, the new design ensures 30 days of analysis data is shown, whereas in the old design, the analysis period varied across cluster types.

Indicate which node groups have optimization recommendations with a purple stroke (a common pattern elsewhere in the product that had not yet been introduced to node optimization).

Consolidate the analysis and recommendation into one side drawer to create a more cohesive view of what Sedai is recommending and why.

Move the optimal recommendation from the main page to a side drawer so it is not confused with the executable recommendations on the main page.

Remove the optimization statuses, since they were outdated, unclear, and essentially meaningless.

For ECS clusters, correctly correlate EC2 recommendations and Fargate recommendations instead of implying the two can be intermixed.

Karpenter Kubernetes Cluster Screens

Non-Karpenter Kubernetes Cluster Screens

ECS Cluster Screens

Thanks for following along!

Thank you for taking the time to explore my design process. If you're interested in a more detailed discussion or have any feedback, please fill out the contact form. As an experience designer, I’m all about feedback, so I’d love to hear your thoughts on my portfolio!